A Taste of DevOps (My Tech-Blog)

The landscape of cloud services adoption, especially among startups, has evolved significantly. Companies no longer rush to leverage core cloud services when transitioning workloads to a public cloud environment. Instead, a more strategic approach is being embraced.

Organizations typically start by establishing a landing zone and trying out managed cloud services, often by hosting a static website as a first, hands-on way to learn the platform. The focus then shifts to automating website releases through a CI/CD pipeline. This shortens the release cycle and gives the team a tangible experience of DevOps practices.

In this post, I walk through serving a static website on AWS using CloudFront and an S3 bucket. Key features of this setup include:

- GitHub as the centralized source code management platform.

- Terraform, an Infrastructure as Code (IaC) tool, to build the cloud infrastructure (Amazon S3 and CloudFront).

- GitHub Actions to automate the CI/CD workflow.

- HCP Terraform (HashiCorp Cloud Platform) as the remote backend for Terraform operations.

- AWS as the public cloud provider.

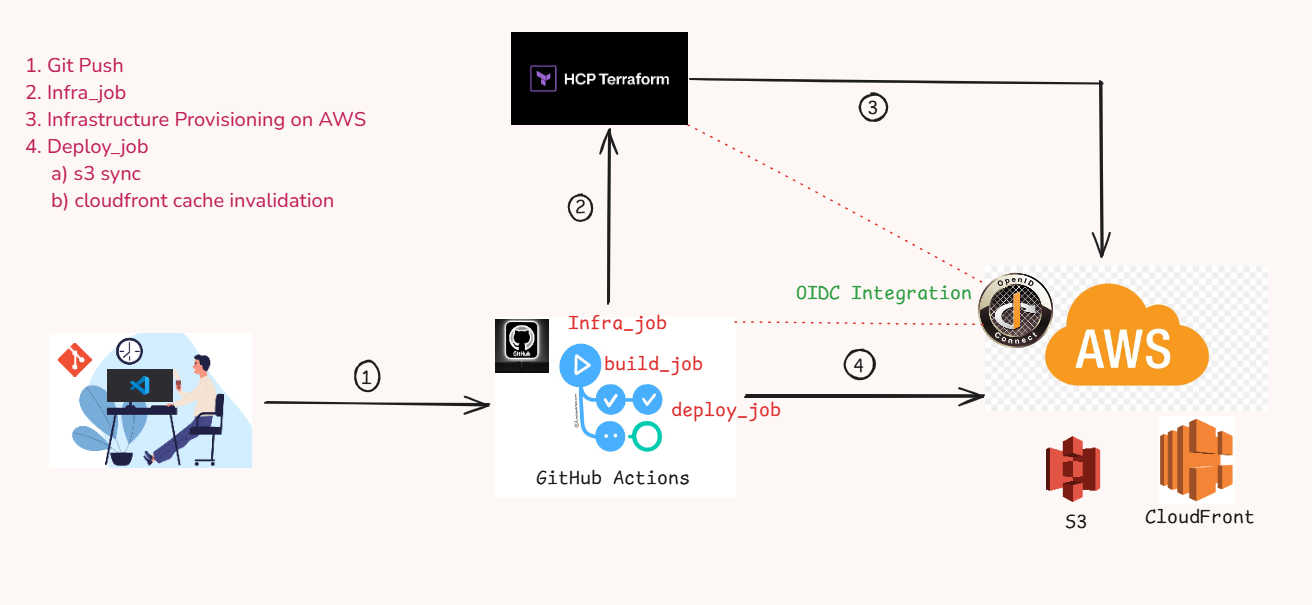

Figure 1: Overview

A workflow is triggered when a developer pushes code to the main branch. The workflow has three jobs defined:

- Provision the AWS infrastructure based on code changes in the terraform directory.

- Build the blog using the static website generator Hugo.

- Update the S3 bucket with the new contents and invalidate the CloudFront cache.
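The trigger and the three jobs described above can be sketched as a workflow skeleton. This is only an outline under my own assumptions: the workflow name, the placeholder `echo` steps, and the `needs` ordering (deploy waits for both other jobs) are illustrative and not taken from the actual repository.

```yaml
# Hypothetical skeleton: job bodies are reduced to placeholders.
name: deploy-blog

on:
  push:
    branches: [main]   # run on every push to the main branch

jobs:
  infra_job:
    runs-on: ubuntu-latest
    steps:
      - run: echo "provision AWS infrastructure with Terraform"

  build_job:
    runs-on: ubuntu-latest
    steps:
      - run: echo "build the site with Hugo"

  deploy_job:
    # Assumed ordering: deploy only after both earlier jobs succeed.
    needs: [infra_job, build_job]
    runs-on: ubuntu-latest
    steps:
      - run: echo "sync to S3 and invalidate the CloudFront cache"
```

Without a `needs` entry, GitHub Actions runs jobs in parallel, so in this sketch infra_job and build_job would start at the same time.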

GitHub Repository

Source code can be found in this GitHub Repo

CI/CD Workflow

The infra_job checks out the current repository and runs the Terraform workflow in HCP Terraform. The HashiCorp - Setup Terraform action installs the Terraform CLI on a GitHub-hosted runner. A few values are written to GITHUB_OUTPUT for use in subsequent jobs.

Infra_job
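A minimal sketch of what such a job might look like is shown here. It rests on several assumptions: the secret name `TF_API_TOKEN`, the step id `tf_out`, and the Terraform output names `bucket_name` and `distribution_id` are hypothetical, and the Terraform code is assumed to already contain a `cloud` block pointing at the HCP Terraform organization and workspace.

```yaml
jobs:
  infra_job:
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: terraform   # Terraform code lives in this directory
    # Expose values to later jobs (output names are hypothetical).
    outputs:
      bucket_name: ${{ steps.tf_out.outputs.bucket_name }}
      distribution_id: ${{ steps.tf_out.outputs.distribution_id }}
    steps:
      - uses: actions/checkout@v4

      - uses: hashicorp/setup-terraform@v3
        with:
          # HCP Terraform API token, stored as a repository secret (assumed name).
          cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}
          # Disable the wrapper so `terraform output -raw` prints only the value.
          terraform_wrapper: false

      - run: terraform init
      - run: terraform apply -auto-approve

      - id: tf_out
        run: |
          echo "bucket_name=$(terraform output -raw bucket_name)" >> "$GITHUB_OUTPUT"
          echo "distribution_id=$(terraform output -raw distribution_id)" >> "$GITHUB_OUTPUT"
```

Each line appended to the file named by `$GITHUB_OUTPUT` becomes a step output, and the job-level `outputs` map is what makes those values visible to other jobs.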


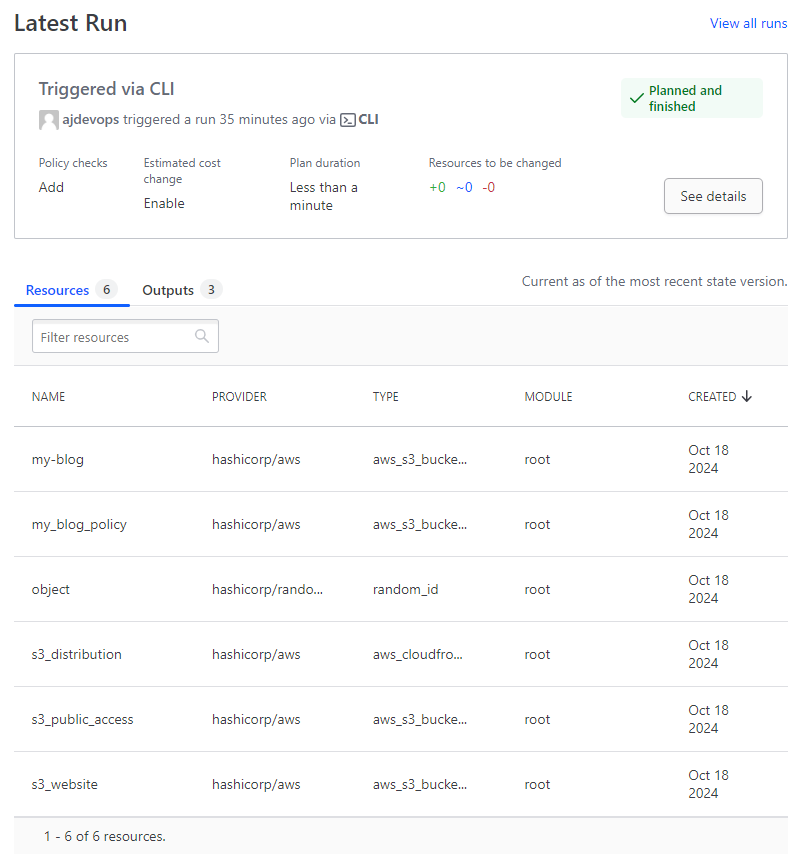

infra_job will create the following resources on the HCP Terraform

Figure2: Resources

Build_job

The build_job is the simplest of the three. It uses the Hugo setup action to install Hugo and build the website. The build artifacts are uploaded with the Upload a Build Artifact GitHub action.
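As a rough sketch, a build job along these lines could look as follows. I am assuming the Hugo setup action is `peaceiris/actions-hugo`, that the theme is pulled in as a git submodule, and that the artifact is named `site`; none of these details are confirmed by the repository.

```yaml
jobs:
  build_job:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
        with:
          submodules: true   # assumption: the Hugo theme is a git submodule
          fetch-depth: 0     # full history, useful if the site shows last-modified dates

      - uses: peaceiris/actions-hugo@v3
        with:
          hugo-version: latest
          extended: true

      # Hugo writes the generated site to ./public by default.
      - run: hugo --minify

      - uses: actions/upload-artifact@v4
        with:
          name: site         # hypothetical artifact name
          path: public
```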


Deploy_job

The final job publishes the website by deploying the artifacts generated in the build_job to Amazon S3. Here, we use the OIDC integration between GitHub and AWS so that the GitHub runner can use the AWS CLI to run the s3 sync and CloudFront cache-invalidation commands.
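A deploy job of this kind might be sketched as below. The secret name `AWS_ROLE_ARN`, the region, the artifact name `site`, and the infra_job output names `bucket_name` and `distribution_id` are all hypothetical. The sketch also assumes an IAM role that trusts GitHub's OIDC provider already exists.

```yaml
jobs:
  deploy_job:
    needs: [infra_job, build_job]
    runs-on: ubuntu-latest
    permissions:
      id-token: write   # required so the runner can request an OIDC token
      contents: read
    steps:
      - uses: actions/download-artifact@v4
        with:
          name: site    # must match the name used when uploading
          path: public

      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: ${{ secrets.AWS_ROLE_ARN }}   # assumed secret name
          aws-region: us-east-1                         # assumed region

      - env:
          BUCKET: ${{ needs.infra_job.outputs.bucket_name }}
          DIST_ID: ${{ needs.infra_job.outputs.distribution_id }}
        run: |
          # Upload new and changed files, removing ones that no longer exist.
          aws s3 sync public "s3://$BUCKET" --delete
          # Invalidate every cached path so CloudFront serves the new content.
          aws cloudfront create-invalidation --distribution-id "$DIST_ID" --paths "/*"
```

With OIDC, no long-lived AWS access keys need to be stored in GitHub; the runner exchanges a short-lived token for temporary credentials on each run.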


Workflow Summary

Figure 3: Workflow Summary

With this in place, all future releases are automated by the GitHub Actions CI/CD workflow. The setup can be further improved by developing on a feature branch and testing changes before merging to the main branch.
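One possible shape for that improvement is a separate workflow that builds the site on every pull request against main. This is only a sketch of the idea; the workflow name, job name, and the choice of `peaceiris/actions-hugo` are my assumptions.

```yaml
# Hypothetical pull-request check: build the site, but do not deploy it.
name: pr-checks

on:
  pull_request:
    branches: [main]

jobs:
  check:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
        with:
          submodules: true   # assumption: the Hugo theme is a git submodule

      - uses: peaceiris/actions-hugo@v3
        with:
          hugo-version: latest
          extended: true

      # A failed build marks the pull request check as failed.
      - run: hugo --minify
```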